Savannah Thais is a postdoctoral researcher at the Princeton Institute for Computational Science and Engineering. She grew up in the Appalachian region with parents who prioritized higher education. Fascinated by how math can describe the universe, Thais earned her bachelor’s in math and physics at the University of Chicago. During her Ph.D. program at Yale, she worked on machine learning techniques for use at the Large Hadron Collider. As she attended machine learning conferences, Thais discovered her interest in creating more intelligent models that can address on-Earth problems like opioid abuse and COVID-19 vaccine distribution. Thais’s postdoctoral research aims to create more targeted machine learning algorithms attuned to specific problems.

DR: How did you become interested in machine learning?

Thais: During college, I was fascinated by particle physics because it is the foundation of the universe, the building blocks of everything that exists. Trying to understand how those particles came to exist, and how they interact with each other to form matter, was the most interesting thing I’d ever heard. And I was intrigued by weird problems in particle physics. For example, we don’t have a quantum model of gravity, even though gravity is one of the first things you learn about. The machine learning aspect came in when I got my first position working with the Large Hadron Collider (LHC), the most powerful particle accelerator in the world, to study the universe.

DR: How did working on the LHC spark your interest in machine learning?

Thais: Looking back, I worked on a pretty basic thing with the LHC – using neural networks to look for rare physics processes. I started going to machine learning conferences and became curious about what it can do beyond physics experiments. I wanted to understand how machine learning algorithms work and can be used for problems that require smaller, more intelligent models. A big trend is to build increasingly deeper, larger networks, which can be a bit wasteful. They have a large environmental impact because of the resources required to run such a big model with millions of learnable parameters. Only large tech companies can even study models that large because of the computing resources they require. We can be smarter about how we create these algorithms so that they are more attuned to a specific problem instead of just growing in complexity.

DR: What sorts of problems could benefit from better targeted machine learning algorithms?

Thais: People like to view machine learning and artificial intelligence (AI) as a purely scientific field, but the reality is that it’s embedded in so many critical societal systems already. It’s used to make decisions about resource allocation, healthcare, who’s in jail and who’s released on bail, who views job ads and gets through the hiring process – any kind of decision you can imagine probably uses or has used AI. Machine learning is just another way to apply math to understand the world. Because it’s becoming so ubiquitous in societal applications, it’s affecting how decisions are made that impact us all. Sometimes, using these giant algorithms, we don’t fully understand what went into those decisions.

DR: How could your research on machine learning shape public policy?

Thais: Artificial intelligence is a field with compelling human interest and, as such, must be viewed as a profession with fiduciary duties to the public. The larger you make the models, the harder it is to understand why they generate a particular decision. This prevents the transparency and interpretability that are key in public sector decision-making. We need these machine learning systems to be auditable – if you don’t get a loan, or released on bail, or a job interview, you should know why that decision was made. We have the notion that a mathematical function can’t possibly be biased. But if you’re training it on historical data that’s biased, that plays into it. The algorithm may have high accuracy, but not be producing fair outcomes. I see a disconnect between how some researchers view our field and how it’s actually been used in the world. I’m interested not only in machine learning, but in activism, public policy, and public service.

DR: How can machine learning researchers help bridge the disconnect?

Thais: It’s critical that everyone in the machine learning space is aware of these issues. Even if you’re not working with humans, researchers need to get involved in the ethics of AI and understand the uncertainties in the models. It’s everyone’s duty because of the widespread and growing use of machine learning for decision-making that impacts people, including in life-or-death situations. While a physics researcher may be studying particles for now, we know that not everyone stays in academia. Some grad students and postdocs will leave and go to work for these big tech companies. So, there’s a big chance that at some point you’ll work with human or societal data, and it’s better to engage with AI ethics and be prepared.

Savannah Thais. Credit: Kolby Brianne.

DR: Like the recent issue that has emerged around facial recognition algorithms?

Thais: Yup, the first problem uncovered was that a lot of those algorithms don’t perform well on nonwhite and nonmale faces because the datasets used to train them were constructed with a bias towards white male faces. This led to problems like software not even recognizing a face if the person was nonwhite. It also leads to a lot of misidentification. One application is predictive policing using security camera footage to find people accused of crimes. A study using video footage from Congress (mis)identified several prominent politicians as professional criminals. We must think about how we can specifically check for bias, such as testing facial recognition on diverse groups of people before using it.
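As an editorial illustration of the kind of check described above, here is a minimal Python sketch that breaks a system's accuracy down by demographic group instead of reporting a single overall number. It is not taken from any study mentioned in the interview: the function names `accuracy_by_group` and `largest_gap`, the group labels, and the toy records are all hypothetical and made up for this example.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return accuracy per group from (group, predicted, actual) tuples."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / totals[group] for group in totals}


def largest_gap(per_group):
    """Difference between the best- and worst-served groups."""
    values = list(per_group.values())
    return max(values) - min(values)


# Hypothetical toy data: (group label, system output, ground truth).
toy_records = [
    ("group_a", "match", "match"),
    ("group_a", "no_match", "no_match"),
    ("group_a", "match", "match"),
    ("group_a", "no_match", "no_match"),
    ("group_b", "match", "match"),
    ("group_b", "match", "no_match"),   # misidentification
    ("group_b", "no_match", "match"),   # face not recognized
    ("group_b", "no_match", "no_match"),
]

per_group = accuracy_by_group(toy_records)
overall = sum(p == a for _, p, a in toy_records) / len(toy_records)
gap = largest_gap(per_group)

print("overall accuracy:", overall)
print("per-group accuracy:", per_group)
print("largest gap:", gap)
```

In this toy data the overall accuracy looks acceptable while one group is served far worse than the other, which is the pattern a single aggregate number hides. A real audit would need representative data and more than one metric, such as separate false-match and false-non-match rates.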

DR: What are your hopes for this intersection of machine learning and AI with equity?

Thais: I advocate for a more interdisciplinary and intersectional approach. There are lots of issues that can’t just be solved mathematically. Are the people represented in the training data opting in to have their information be used for the study? Even if you build an unbiased system, it could be sold by Facebook to the military and used to target drone strikes. Is that ethical? If you put your photo on Facebook, are you consenting to having your data used to kill people? And what about how systems are deployed? Are they used equitably, or to monitor the parts of society already marginalized? Many facial recognition systems have been predominantly used in minority areas.

DR: How do you tackle these policy issues?

Thais: We need to be mathematically unbiased, but also think about who’s involved in the process of deciding on acceptable bias. Even if you’re building something you define as unbiased, we’re still deciding what biased means. To be totally unbiased, we would need a deterministic model of the universe, or at least of Earth. We can’t even do that at a particle physics level, let alone a societal level. I push for people to work with social scientists, legal scholars, and the communities that we’re studying and deploying AI in. Who’s in the room when decisions are being made? All of these stakeholders should be involved in the conversation. We should promote technical literacy so that people can make informed decisions about being part of the AI systems.

DR: Do you have examples of good practices in this regard?

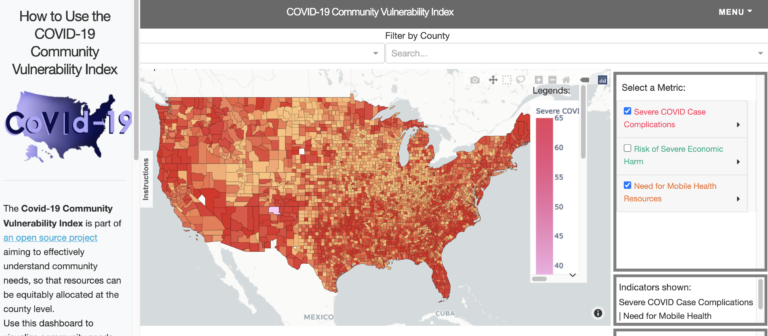

Thais: Stemming from an initiative called Science Responds, I founded Community Insight and Impact, whose mission is to empower communities through equitable, responsible data analytics. Our kick-off project was for the COVID-19 pandemic. We wanted to pinpoint areas where mobile health resources would be the most impactful, specifically for people expected to be hardest hit economically based on a range of factors – service jobs, self-employment, health status, etc. In public health, lots of socioeconomic factors are interlinked. From the beginning I was adamant about bringing in team members from outside of data science. We got people from urban planning, public health, sociology, mental health research, and community advocacy groups. The COVID-19 Community Vulnerability Index is a site that shows data on community vulnerabilities around the pandemic county by county, a tool for equitably allocating health care resources. I’m excited to be one of the grant recipients for JustOneGiantLab, which will allow us to continue to support vaccine distribution equity and economic recovery from COVID-19.

DR: What other sorts of issues can be addressed through this sort of big science collaborative?

Thais: We’ve expanded from our initial focus on COVID-19 to helping Planned Parenthood allocate resources for sexual health education. The goal is to target resources in a way that prevents outcomes most people agree we don’t want, like increased rates of sexually transmitted infection and pregnancy in teens. Here too, we need to be careful that we’re not making unfounded assumptions. For example, if we see high pregnancy rates, the causes and solutions will differ depending on the underlying variables, including policies and religious beliefs. That’s why we must have interdisciplinary teams. We could uncover something statistically and publish a paper about it, but miss the causal factors without including someone who works on public health. We really try to bake all of these conversations into the methodology. Our focus is on Appalachia – that’s where I’m from.

DR: How does it feel for you to be working on issues that are close to home?

Thais: I’ve always felt passionately about improving conditions in Appalachia. That’s what inspired another machine learning project I’m working on to model region-specific opioid abuse behavior in Appalachia. I feel very lucky in how I grew up with parents who were able to help me get educated and participate in afterschool programs. Growing up in a rural area with lots of poverty, maybe I feel kind of guilty that so much of your trajectory in life is determined by things you can’t control. If I hadn’t had a household with two employed and involved parents, however smart I might think I am, my life would have ended up differently. So, this is a way I can give back. We must always remember to bring others along on our journey.

Do you have a story to tell about your own local engagement or of someone you know? Please submit your idea here, and we will help you develop and share your story for our series.