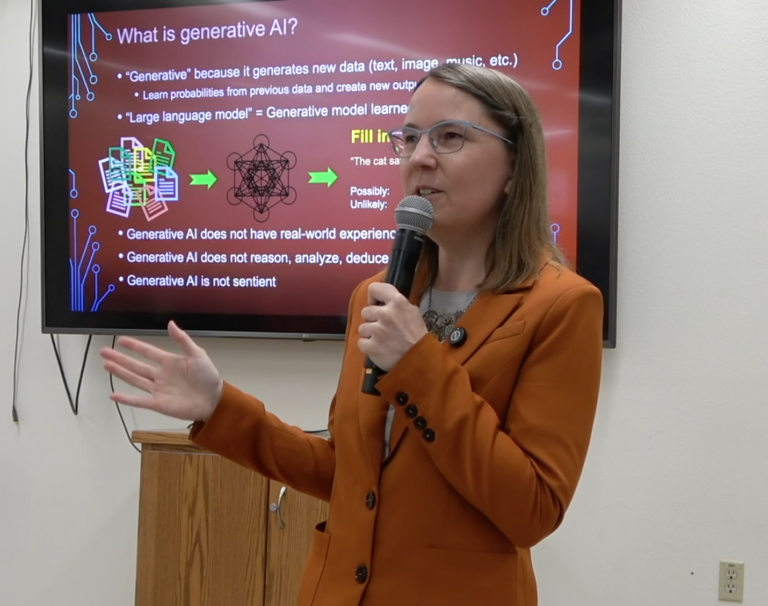

Kiri Wagstaff is a pioneering artificial intelligence researcher and educator whose career bridges machine learning, planetary science, and public policy. Wagstaff holds a Ph.D. and M.S. in computer science from Cornell University and spent a year as an AI subject matter expert in the U.S. Senate. She currently serves as a Special Advisor on Artificial Intelligence for the Oregon State University Libraries and teaches a graduate course on applying AI to real-world challenges. Before joining OSU, Dr. Wagstaff spent two decades at NASA’s Jet Propulsion Laboratory, where she developed machine learning systems that helped spacecraft explore and interpret data from across the solar system.

In her spare time, she brings AI literacy to the public, partnering with libraries, schools, and community groups to help people understand and confidently engage with artificial intelligence.

This interview has been edited for clarity and length.

JL: What inspired you to bring AI literacy programming into public libraries and other community “third spaces”?

Wagstaff: I’ve always loved teaching. And over the past four or five years, I’ve noticed that it’s not just computer science students who want to learn about AI; there’s a growing urgency among everyone to understand what it means and how it affects their lives. Given my experience as an AI researcher, expanding access to approachable AI education was a natural path.

In Congress, I had the opportunity to act as an AI subject matter expert within Senator Mark Kelly’s office. I helped identify emerging AI topics, and one of the areas he gravitated toward was AI literacy. We worked on a bipartisan bill called the Consumers LEARN AI Act to promote federal AI literacy initiatives. After so many important conversations on that topic, I didn’t want that momentum to fade when I returned to Oregon.

I connected with the librarians at Oregon State University who were in the process of creating an AI Literacy Center. They asked me to give an introductory public talk on basic AI literacy, after which I began receiving requests from public libraries and local groups to give similar talks. Everyone, from retirement communities to Rotary Club meetings, is interested in learning about AI. The AI Literacy Center also arranged for me to speak with middle and high school teachers to help them engage their students in discussions about AI. It’s been really rewarding to see how much curiosity and enthusiasm there is across these different spaces. I encourage everyone to consider reaching out to their local community groups and offering their own expertise.

JL: How do you balance technical accuracy with accessibility for non-technical audiences?

Wagstaff: My goal isn’t to turn everyone into an AI expert. I want to help people feel confident making informed decisions about when and how to use AI in their own lives. So, I focus on the conceptual rather than the computational. It’s less about how AI works and more about what it can do, including its strengths and limitations. I also make a point of showing that AI isn’t just generative tools like ChatGPT. Everyday technologies, like email spam filters or Netflix show recommendations, are powered by AI too. That realization helps people see that AI, along with related concerns about privacy and ethical use, has been part of their lives for years.

I usually find that the most meaningful moments come from the Q&A and the conversations that follow. It often feels more like a fireside chat than a lecture. Many attendees are worried about AI competing in their professional fields, which is understandable given the sensational headlines and AI’s rapid integration into our daily lives. Others are intrigued by how “supportive” it can seem as a companion. I remind them that the emotional connection in those interactions comes from them, not the AI. My goal is to empower people to use AI safely and effectively. That focus makes it easier to connect with and educate a general audience.

JL: You recently served as an AI subject matter expert in the U.S. Senate. What did you learn about how policymakers are approaching AI governance?

Wagstaff: I was there in 2023–2024, a particularly interesting time because Congress felt enormous pressure to “do something” about AI. There were a lot of competing messages that made concrete legislation difficult. AI entrepreneurs like Sam Altman, who initially called for regulation, later warned that it could stifle innovation. Some voices were sounding alarms about the risks of AI, while others argued that the U.S. needed to lead globally in its development. Any meaningful policy had to balance both perspectives.

Typically, Senate and House committees bring in three experts for a hearing on a given topic. But in recognition of the broad scope of AI’s impacts and the need for more expertise, the Senate decided to conduct nine “AI Insight Forums,” each focused on a specific area like elections, defense, or privacy. They brought in about twenty experts for each forum, who were quizzed by Senators in a lightning-round format. Those discussions led to a report outlining areas of bipartisan agreement that could form the foundation for legislation. Even so, only three AI-related bills passed that year. In 2025, Congress passed the TAKE IT DOWN Act, which allows individuals to request that platforms remove nonconsensual intimate visual materials, whether created by AI or not. This is a great example of how overlapping priorities can lead to action.

I came in as an AI expert, not a policy expert, so I had a lot to learn. Senator Kelly’s legislative aides were incredibly helpful in coaching me through the process of drafting legislation and meeting with other offices to build bipartisan consensus and support for a bill. I also learned a lot from a book on how to write legislation. The Library of Congress’s nonpartisan Congressional Research Service (CRS) was another great resource for locating data and providing context, which helped me, and Congress as a whole, do the job.

The bill I helped work on focused on consumer AI literacy. The goal was to help people make informed decisions about when and where to use AI in their daily lives. The idea was to create a coordinated federal strategy across agencies, with a public education campaign to share short, accessible “AI gems” that demystify the technology. We also wanted to make the goals actionable. For example, if an AI scribe is used in a nurse’s office, should patients be asked to consent to its use during their screenings? What do data and experience tell us about how AI affects patient privacy and rights? The bill aimed to provide that kind of practical guidance, developed collaboratively across agencies that each bring their own expertise. The bill was introduced in 2024 but didn’t receive a floor vote. It was reintroduced in 2025 by a larger group of Senators, and I am excited about its prospects.

JL: Much of your earlier research at NASA JPL focused on real-world machine learning. What were some of the most exciting or unexpected applications?

Wagstaff: I was part of a group focused on developing machine learning techniques for space exploration. That often meant building intelligence into spacecraft that are so far away that we can’t easily intervene, but AI can. We had to ask: How much can we trust AI on a spacecraft? What kinds of decisions should it be allowed to make? The key was finding tasks that could be safely automated within a constrained computational environment and without interfering with other critical operations. For example, while an AI routine is running, the rover might not be able to drive. So, the focus is on maximizing the scientific return within those limits.

For example, a rover might only be able to send back ten images per day. AI can help decide which ten are the most valuable to transmit. One of my favorite applications was on the Mars Curiosity Rover, which has a laser spectrometer called ChemCam that analyzes the composition of rocks from a distance. JPL developed an AI system to decide which rocks were the most promising targets for that laser.

JL: How do you personally decide when AI shouldn’t be used?

Wagstaff: AI companies have done the public a bit of a disservice by presenting generative AI “chatbots” as if they work like a Google search. The responses feel like answers, but they’re really just sequences of words that sound plausible. Users are often puzzled when the same question receives a different answer the second time, or when a chatbot reverses its claims in response to a request for clarification. That baffled, frustrated feeling is a sign that we don’t have the right expectations in mind.

If I’m looking up a factual question, I would never use a generative AI chatbot. But, if I wanted to make a poem sound more like Robert Frost, it’s great for creativity and experimentation. The problem is that these models can’t fact-check themselves. And as search engines and generative AI continue to merge, it’s becoming harder to tell whether we’re seeing a real search result or a convincing fabrication.

We all have this fantasy of an all-knowing oracle, but that’s not what generative AI is or ever will be. It’s more like an improv artist. It can riff beautifully (and entertainingly), but it’s not an expert.

JL: What advice would you give to scientists interested in contributing to policy discussions around AI ethics and regulation?

Wagstaff: One of the most straightforward ways is by responding to federal requests for information (RFIs), which are published in the Federal Register. These call for feedback on specific topics, AI included, and anyone can respond. This year alone, there were two major requests on AI policy, and each one received over 10,000 public comments. You don’t have to be an expert; you just have to know they exist and take the time to share your perspective. It’s a powerful way for individuals to have a voice in how AI develops.

Public libraries, like most community spaces, also love this kind of programming. You just have to reach out. Don’t assume people already have the expertise; many are eager to learn but don’t know where to start.

My advice is to start sharing your perspective wherever you can. Write an op-ed, give a local talk, or offer to speak with your nearby news outlet. Once people see your insights out there, they’ll start reaching out to you, and it creates a kind of snowball effect. More opportunities to contribute will come naturally once you make your voice part of the conversation.

Learn more about Kiri on her website.

Do you have a story to tell about your own local engagement or that of someone you know? Please submit your idea here, and we will help you develop and share your story for our series.